Modeling Social Engineering Risk using Attitudes, Actions, and Intentions Reflected in Language Use

Adam Dalton, Alan Zemel, Amirreza Masoumzadeh, Archna Bhatia, Bonnie Dorr, Brodie Mather, Bryanna Hebenstreit, Ehab Al-Shaer, Ellisa Khoja, Esteban Castillo Juarez, Larry Bunch, Marcus Vlahovic, Peng Liu, Peter Pirolli, Rushabh Shah, Sabina Cartacio, Samira Shaikh, Sashank Santhanam, Sreekar Dhaduvai, Tomek Strzalkowski, Younes Karimi [in alphabetical order]

Poster at the 32nd International FLAIRS Conference, Florida, USA, May 2019

Abstract

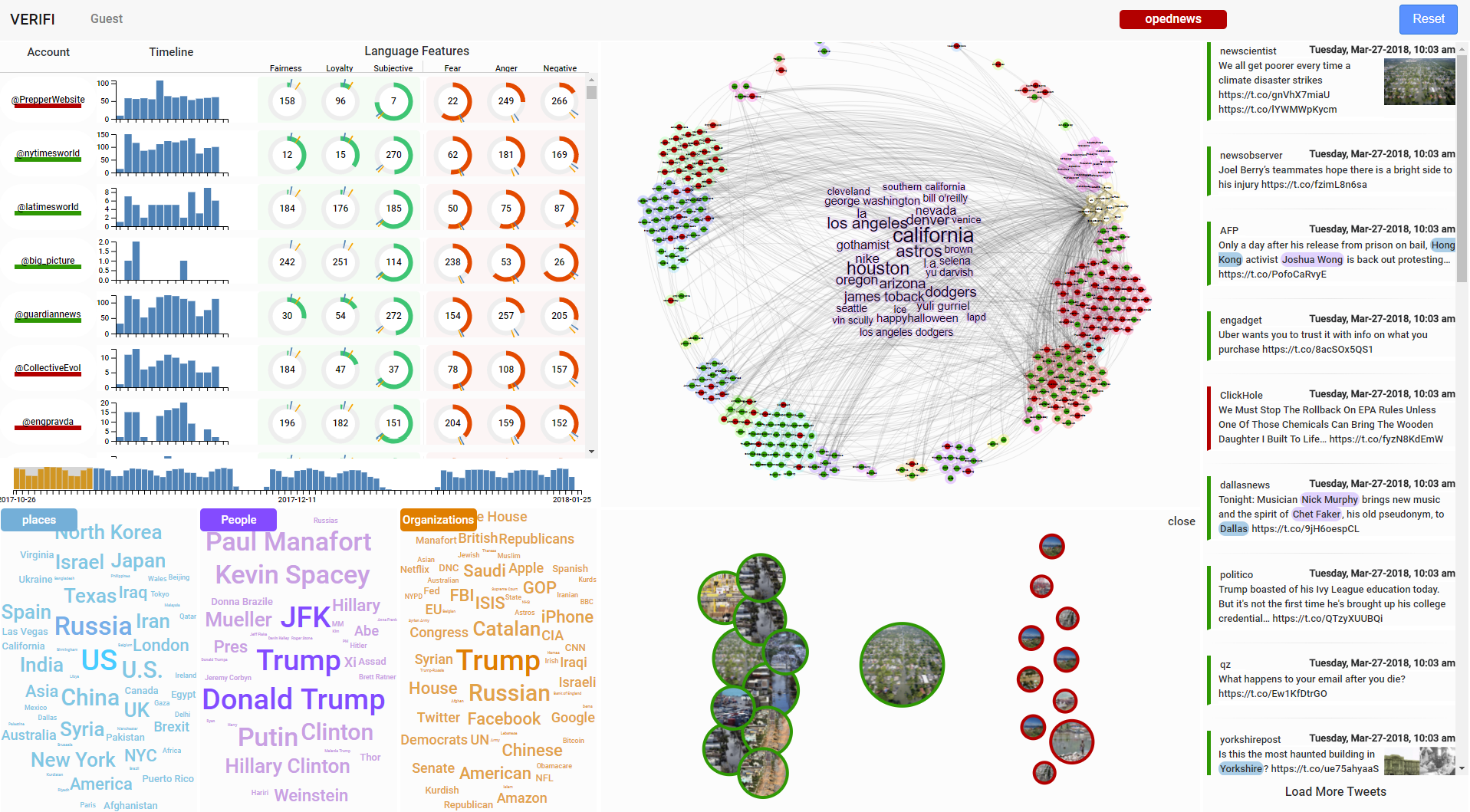

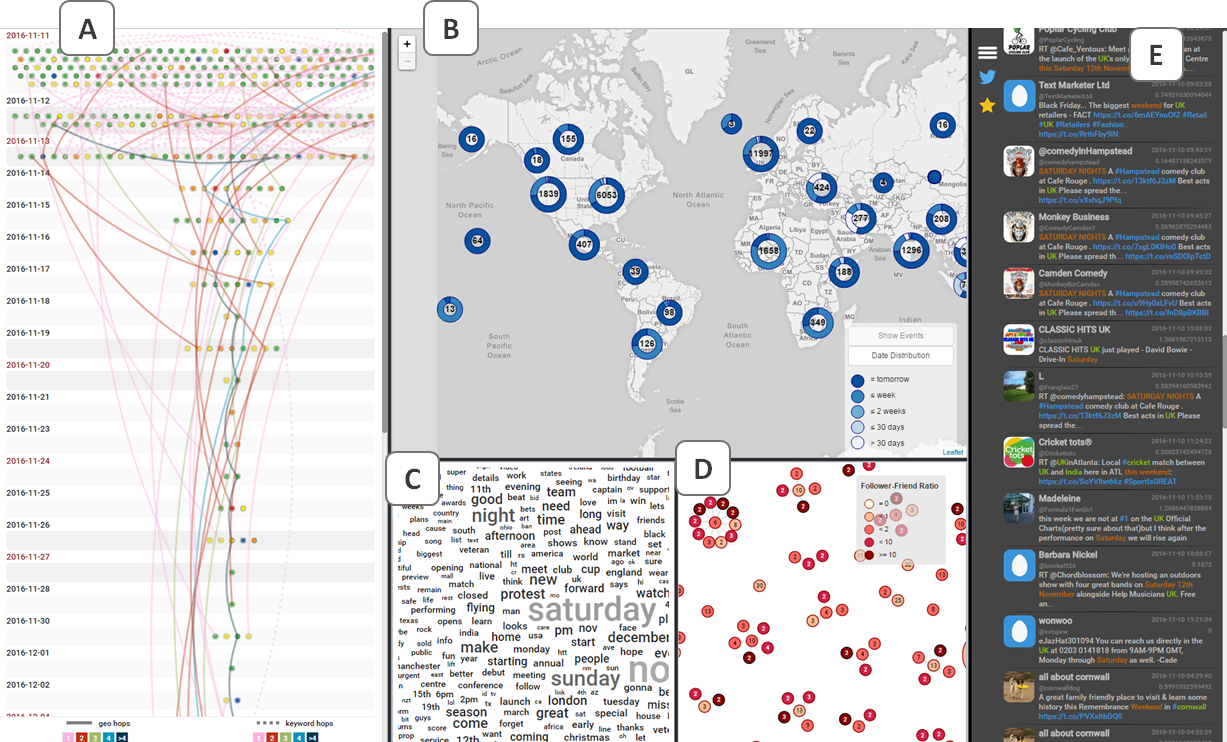

Social engineering attacks are a significant cybersecurity threat that puts individuals and organizations at risk. Detection techniques based on metadata have been used to block such attacks, but their success at early detection has been limited. Natural language processing and computational sociolinguistics techniques can provide a means of detecting and countering such attacks. We adopt the view that the actions and intentions of adversaries in social engineering attacks, which we call "asks," can be gleaned from the adversaries' language use during communication with their targets. Timely recognition and understanding of these asks can facilitate the deployment of proper safeguards and simultaneously enable engagement of the adversaries in ways that compel them to divulge information about themselves. Attitudes that the adversaries attempt to induce in their targets to secure easy compliance can also be identified proactively to compute social engineering risk. These identified attitudes can be used to help the target make the right decisions. Moreover, the techniques used for detection can be used to induce attitudes in the adversaries. We present a model of social engineering risk that uses knowledge of the actions, induced attitudes, and intentions embedded in the dialogue between an adversary and a target user. Informed by such a model, we are building a platform for Personalized AutoNomous Agents Countering Social Engineering Attacks (PANACEA). The platform informs users about social engineering risk situations and can automatically generate responses to engage the adversary and protect the user.
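To make the idea of reading "asks" and induced attitudes off an adversary's language more concrete, the following is a minimal, hypothetical sketch. It is not the PANACEA implementation: the cue patterns, the category names (`GIVE_CREDENTIALS`, `URGENCY`, and so on), the `assess` function, and its scoring rule are all illustrative assumptions, and simple keyword matching stands in for the natural language processing and sociolinguistic analysis described above.

```python
import re
from dataclasses import dataclass

# Hypothetical cue patterns for "asks" (actions the adversary wants the target to take).
# These are illustrative assumptions, not the lexicons used by PANACEA.
ASK_PATTERNS = {
    "GIVE_CREDENTIALS": re.compile(r"\b(password|login|verify your account)\b", re.I),
    "PERFORM_PAYMENT": re.compile(r"\b(wire|gift card|payment|transfer)\b", re.I),
    "CLICK_LINK": re.compile(r"\b(click|open) (the|this) (link|attachment)\b", re.I),
}

# Hypothetical cue patterns for attitudes the adversary tries to induce in the target.
ATTITUDE_PATTERNS = {
    "URGENCY": re.compile(r"\b(urgent|immediately|within 24 hours|asap)\b", re.I),
    "FEAR": re.compile(r"\b(suspended|locked|legal action)\b", re.I),
}


@dataclass
class Assessment:
    asks: list[str]       # detected ask categories, in pattern-table order
    attitudes: list[str]  # detected induced-attitude categories
    risk: float           # toy risk score in [0, 1]


def assess(message: str) -> Assessment:
    """Toy risk assessment: flag asks and induced attitudes in a single message."""
    asks = [name for name, pat in ASK_PATTERNS.items() if pat.search(message)]
    attitudes = [name for name, pat in ATTITUDE_PATTERNS.items() if pat.search(message)]
    # Assumed scoring rule: without an ask there is no risk; with an ask the base
    # risk is 0.5, and each induced attitude adds 0.25, capped at 1.0.
    risk = min(1.0, 0.5 + 0.25 * len(attitudes)) if asks else 0.0
    return Assessment(asks, attitudes, risk)


if __name__ == "__main__":
    msg = ("URGENT: your account will be suspended. "
           "Click the link and verify your account immediately.")
    print(assess(msg))
```

For the sample message, the sketch flags two asks (credentials and a link click) and two induced attitudes (urgency and fear), so the toy score reaches its cap of 1.0; a benign message such as "See you at lunch tomorrow." yields no asks and a score of 0.0. Requiring an ask before any risk is assigned reflects the view above that the adversary's requested actions are central, while attitudes such as urgency only amplify the risk.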